Vibe Coding, Agents, and Decentralized Intelligence

This post explores vibe coding, swarm coding, workflow orchestration, and blockchain privacy, showing how humans and AI co-create code, evaluate models, and build decentralized, scalable, and agentic AI.

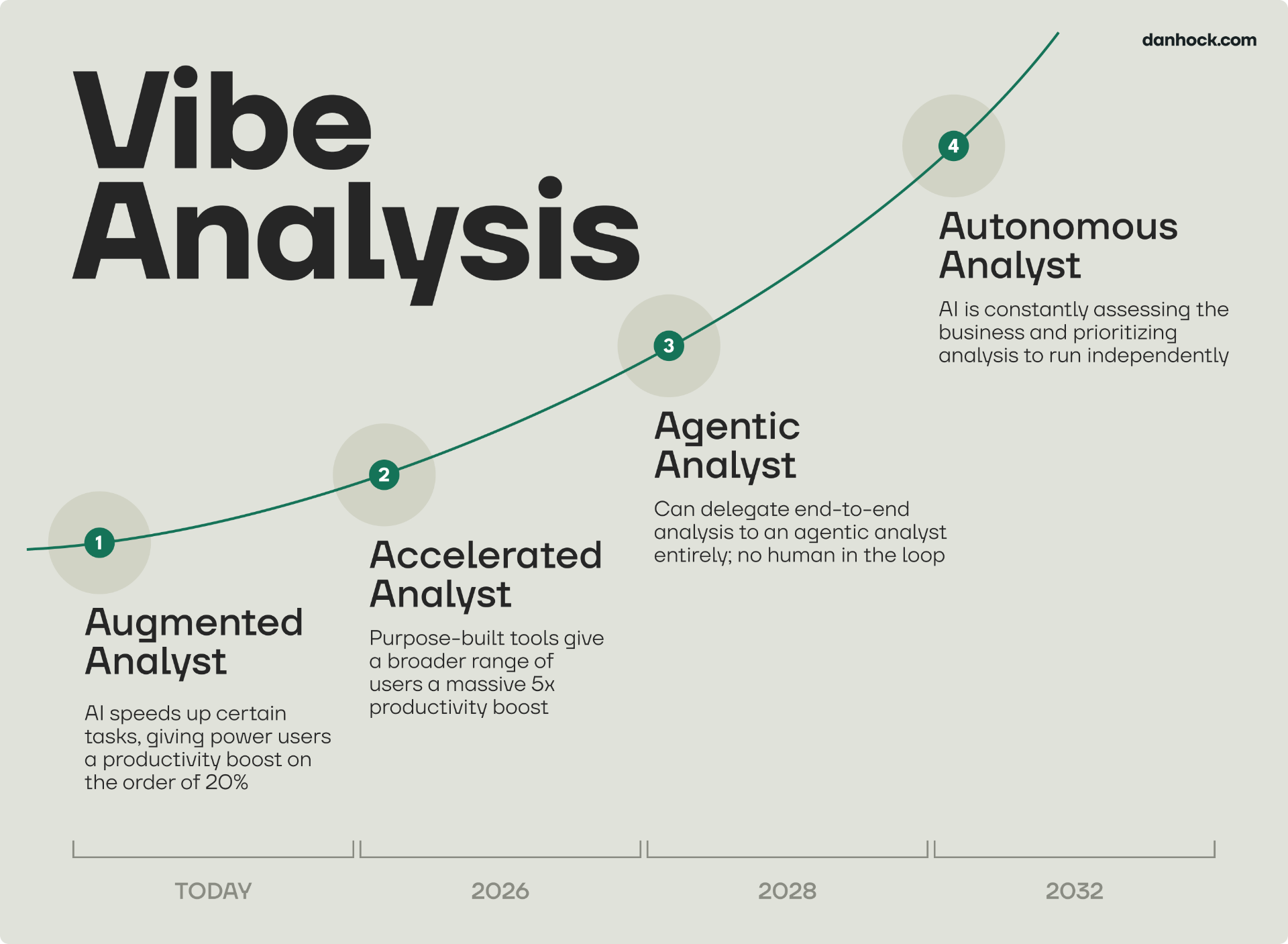

From Vibe Coding, via Context Engineering, to Vibe Analysis: In essence, these trends represent a unified shift away from explicit instruction toward holistic design. Vibe coding provides the philosophical framework for this intuitive interaction, while vibe analysis demonstrates its practical power. Crucially, context engineering emerges as the essential discipline that makes it all work, providing the structure and reliability needed to translate abstract "vibes" into functional, complex AI agents.

Artificial Intelligence has rapidly shifted from research novelty to an engineering discipline where tools, frameworks, and methods evolve daily. As AI engineers and data scientists, we don't just build models—we navigate a dynamic ecosystem of agents, privacy challenges, and innovative workflows.

In this post, I'll share some of the most exciting developments I've been exploring recently, from vibe analysis to decentralized AI and swarm coding.

From Vibe Coding, via Context Engineering, to Vibe Analysis

We are witnessing the rise of what Andrej Karpathy coined vibe coding:

"There's a new kind of coding I call vibe coding, where you fully give in to the vibes, embrace exponentials, and forget that the code even exists."

Vibe coding transcends traditional programming and can be applied across domains. A striking example is Dan Hock's concept of Vibe Analysis, which shows how AI can move beyond language understanding and generation to analyze numerical data. With plain language prompts, we can now extract meaningful analytical insights directly from raw data—no specialized coding required.

Alongside this, context engineering is emerging as the evolution of prompt engineering. Rather than just writing prompts, it focuses on carefully designing the entire context an LLM needs—system prompts, structured inputs/outputs, tools, memory, and retrieval. By architecting, testing, and refining this context, developers can build agents that perform complex, dynamic tasks more accurately and efficiently. (See: Prompt Engineering Guide, LangChain Blog, Phil Schmid)
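To make the idea of "designing the entire context" a bit more tangible, here is a minimal sketch of how the pieces listed above could be assembled into one model call. The `Context` class, its field names, and `build_messages` are my own illustrative assumptions, not part of any particular framework; the chat-style message format is just a common convention.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Everything the model sees for one call (all field names are illustrative)."""
    system_prompt: str
    tools: list[str] = field(default_factory=list)      # short tool descriptions
    memory: list[str] = field(default_factory=list)     # notes from earlier turns
    retrieved: list[str] = field(default_factory=list)  # snippets from retrieval
    output_schema: str = ""                             # expected output shape


def build_messages(ctx: Context, user_input: str) -> list[dict[str, str]]:
    """Assemble a chat-style message list from the individual context parts."""
    system_parts = [ctx.system_prompt]
    if ctx.tools:
        system_parts.append("Available tools:\n" + "\n".join(f"- {t}" for t in ctx.tools))
    if ctx.output_schema:
        system_parts.append("Respond using this format:\n" + ctx.output_schema)

    user_parts = []
    if ctx.memory:
        user_parts.append("Memory:\n" + "\n".join(ctx.memory))
    if ctx.retrieved:
        user_parts.append("Retrieved context:\n" + "\n".join(ctx.retrieved))
    user_parts.append("Question: " + user_input)

    return [
        {"role": "system", "content": "\n\n".join(system_parts)},
        {"role": "user", "content": "\n\n".join(user_parts)},
    ]


ctx = Context(
    system_prompt="You are a data analyst.",
    tools=["sql_query(query)"],
    retrieved=["Q3 revenue was 1.2M"],
)
messages = build_messages(ctx, "Summarize Q3.")
```

Keeping each part as a separate field is what makes the context testable: you can swap the retrieval step or the output schema and compare results without touching the rest.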

Just as vibe coders must take responsibility for the code shaped by their intuition, users of AI systems must be mindful of the outputs they generate and the responsibility they carry in applying them.

Prompt Engineering & Evaluation

Every AI system starts with a system prompt—the hidden foundation that governs its behavior. Crafting these prompts has become an art, as shown in resources like the GPT-5 prompting guide and advanced techniques for data science.

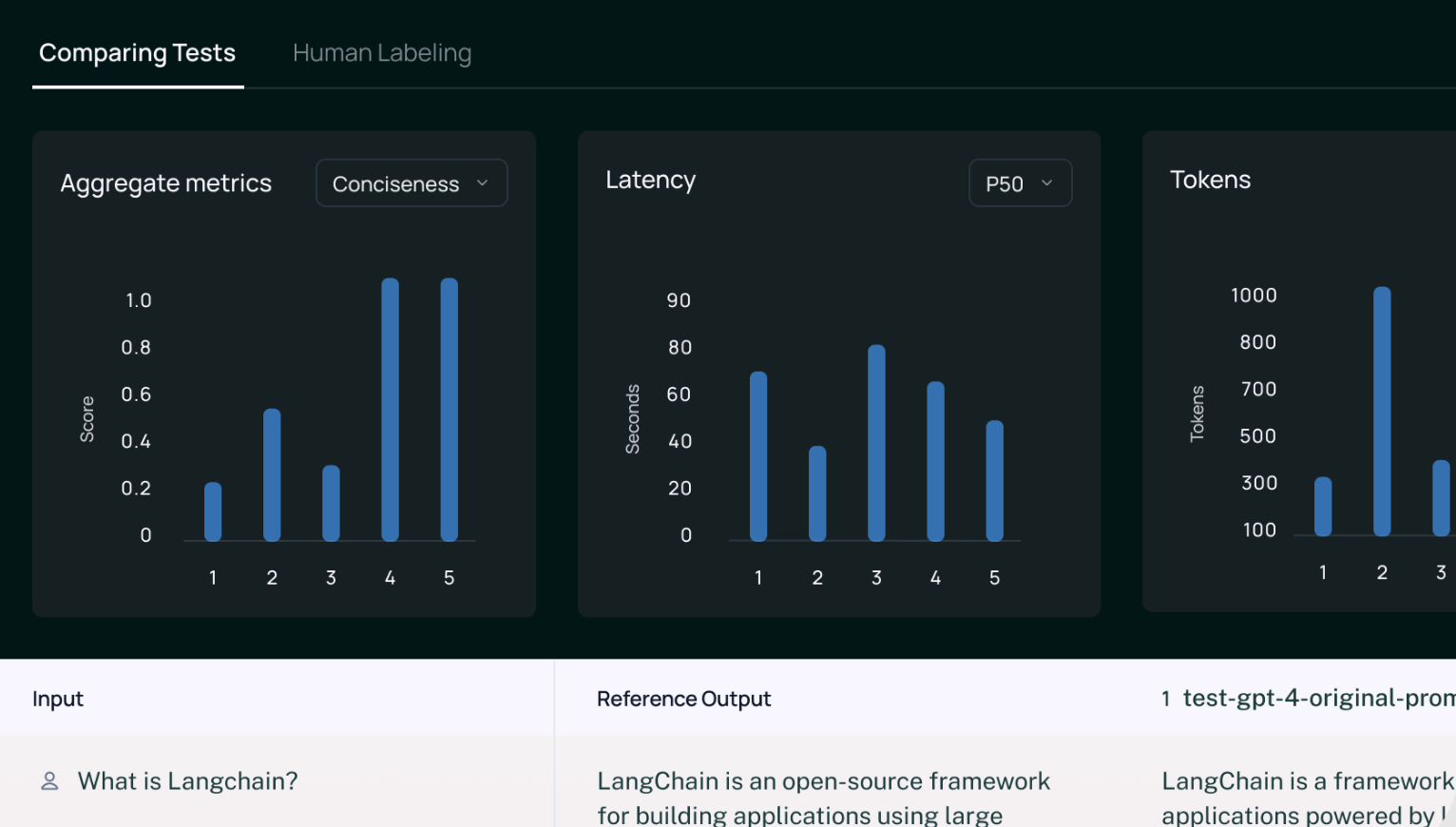

But strong prompts alone aren't enough. To move from prototype to production, we need rigorous evaluations (evals) to measure performance, reliability, and safety.

In a world where AI systems are tasked with increasingly critical functions, systematic evaluation, and with it the shift from "it feels right" to "we can prove it's right", is the essential foundation for responsible and scalable AI development.

Evals answer core questions:

Does the model give correct and consistent answers?

Does it avoid harmful or biased outputs?

Is it reliable enough for real-world use?

Types of Evals

Automated evals: Predefined tests (e.g., math accuracy, API correctness).

Human evals: People rating outputs for quality, tone, or safety.

Model-based evals (LLM-as-a-Judge / G-Eval): Using another LLM to grade responses. This scales better than human evals but requires calibration to avoid bias. In the LLM-as-a-Judge approach, you prompt a capable model to rate responses against clear criteria, providing it with the original prompt, the generated output, and any necessary context. G-Eval is a framework that applies LLM-as-a-Judge with chain-of-thought (CoT) reasoning to evaluate LLM outputs.
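The LLM-as-a-Judge pattern described above can be sketched in a few lines. This is a simplified illustration, not the G-Eval implementation: the template wording, the 1 to 5 scale, and the names `judge` and `fake_llm` are assumptions of mine, and `fake_llm` is a stand-in so the example runs without any API key.

```python
import re
from typing import Callable

# Illustrative judge prompt: criteria, original prompt, and the answer to grade.
JUDGE_TEMPLATE = """You are grading an AI answer.
Criteria: {criteria}
Original prompt: {prompt}
Answer to grade: {answer}
Think step by step, then finish with a line 'SCORE: <1-5>'."""


def judge(prompt: str, answer: str, criteria: str, call_llm: Callable[[str], str]) -> int:
    """Ask a judge model for a 1-5 score and parse it from the reply."""
    reply = call_llm(JUDGE_TEMPLATE.format(criteria=criteria, prompt=prompt, answer=answer))
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    if match is None:
        raise ValueError("judge reply did not contain a score")
    return int(match.group(1))


def fake_llm(judge_prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return "The answer is correct but terse.\nSCORE: 4"


score = judge("What is 2 + 2?", "4", "factual correctness", fake_llm)
```

In practice you would replace `fake_llm` with a call to your provider of choice and compare a sample of judge scores against human ratings before trusting them.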

Costs & Considerations

Since LLMs are billed per token ($/token), each evaluation adds cost: input tokens + output tokens. With G-Eval, you trade human labor for automated scalability, but each evaluation still consumes tokens.
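A quick back-of-the-envelope calculation helps here. The function below is a minimal sketch; the token counts and the per-million-token prices in the example are made-up numbers for illustration, not any provider's actual pricing.

```python
def eval_cost_usd(
    n_evals: int,
    input_tokens: int,
    output_tokens: int,
    usd_per_1m_input: float,
    usd_per_1m_output: float,
) -> float:
    """Total cost of n_evals judge calls, given average tokens per call."""
    per_eval = (
        input_tokens * usd_per_1m_input + output_tokens * usd_per_1m_output
    ) / 1_000_000
    return n_evals * per_eval


# Hypothetical numbers: 1,000 evals, 800 input and 200 output tokens each,
# priced at 2 USD and 8 USD per million tokens.
total = eval_cost_usd(1000, 800, 200, usd_per_1m_input=2.0, usd_per_1m_output=8.0)
print(f"Estimated eval cost: {total:.2f} USD")
```

With these assumed numbers the run costs a few dollars; the same formula makes it easy to see how quickly the bill grows with longer contexts or chain-of-thought outputs.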

Why Evals Matter

Robust evaluation frameworks are the key to building safe, reliable, and scalable AI systems. They establish trust, catch regressions, and support continuous monitoring in production. As OpenAI and others highlight, evals are becoming the "unit test suite" for AI workflows. Tools like Autoblocks and evaluation pipelines make this increasingly accessible.
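The "unit test suite" analogy can be taken quite literally. Below is a minimal sketch of an automated eval that computes exact-match accuracy over a fixed set of cases; the cases, the `toy_model` stand-in, and the idea of gating on a threshold are illustrative assumptions rather than any specific tool's workflow.

```python
from typing import Callable

# A tiny, fixed eval set: (prompt, expected answer).
EVAL_CASES = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
    ("What is 10 / 4?", "2.5"),
]


def run_evals(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Return the fraction of cases where the model's answer matches exactly."""
    passed = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return passed / len(cases)


def toy_model(prompt: str) -> str:
    """Stand-in for a real model; it knows two of the three answers."""
    answers = {"What is 2 + 2?": "4", "What is the capital of France?": "Paris"}
    return answers.get(prompt, "unknown")


accuracy = run_evals(toy_model, EVAL_CASES)
print(f"accuracy = {accuracy:.2f}")
```

Running this on every prompt or model change, and failing the build when accuracy drops below an agreed threshold, is one simple way to catch regressions.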

🔗 Further reading: LLM Evaluation Metrics, OpenAI Academy: Evals.

AI Agents: Virtual Scientists and Cost Traps

Stanford's recent virtual lab experiments show how AI scientists can autonomously generate hypotheses and even conduct experiments.

But as any AI engineer knows, the caveat is cost: running a swarm of agents isn't free. Designing efficient workflows means carefully balancing autonomy vs. budget. Having AI agents talk to each other in a loop can consume a lot of tokens, because each turn typically resends the growing conversation history.
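One simple guardrail is a hard token budget on agent-to-agent loops. The sketch below is only an illustration: `count_tokens` is a crude whitespace count standing in for a real tokenizer, the agents are plain functions, and the budget check assumes each call is billed for the full history plus the reply.

```python
from typing import Callable

Agent = Callable[[str], str]


def count_tokens(text: str) -> int:
    """Crude stand-in: real tokenizers count differently."""
    return len(text.split())


def run_dialogue(
    agents: list[Agent], opening: str, token_budget: int, max_turns: int = 20
) -> tuple[list[str], int]:
    """Alternate between agents until the token budget or turn limit is hit."""
    transcript = [opening]
    used = 0
    for turn in range(max_turns):
        history = "\n".join(transcript)
        input_cost = count_tokens(history)  # the whole history is resent each turn
        if used + input_cost >= token_budget:
            break  # stop before paying for another call
        reply = agents[turn % len(agents)](history)
        # The reply size is unknown in advance, so the budget can be
        # overshot by at most one reply.
        used += input_cost + count_tokens(reply)
        transcript.append(reply)
    return transcript, used


def polite_agent(history: str) -> str:
    return "ok thanks"


transcript, used = run_dialogue([polite_agent, polite_agent], "hello there", token_budget=20)
```

Even with two-word replies, the per-turn input cost grows with every exchange, which is why loops between agents get expensive faster than intuition suggests.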

Letting AI code mostly on its own also brings risks, notably AI hacking. Pliny the Liberator demonstrated this by convincing an AI to manipulate pricing. Evaluation benchmarks for such risks are being created (Andriushchenko, M., & Flammarion, N., 2024), a point that came out of my discussions with Marija Burianova.

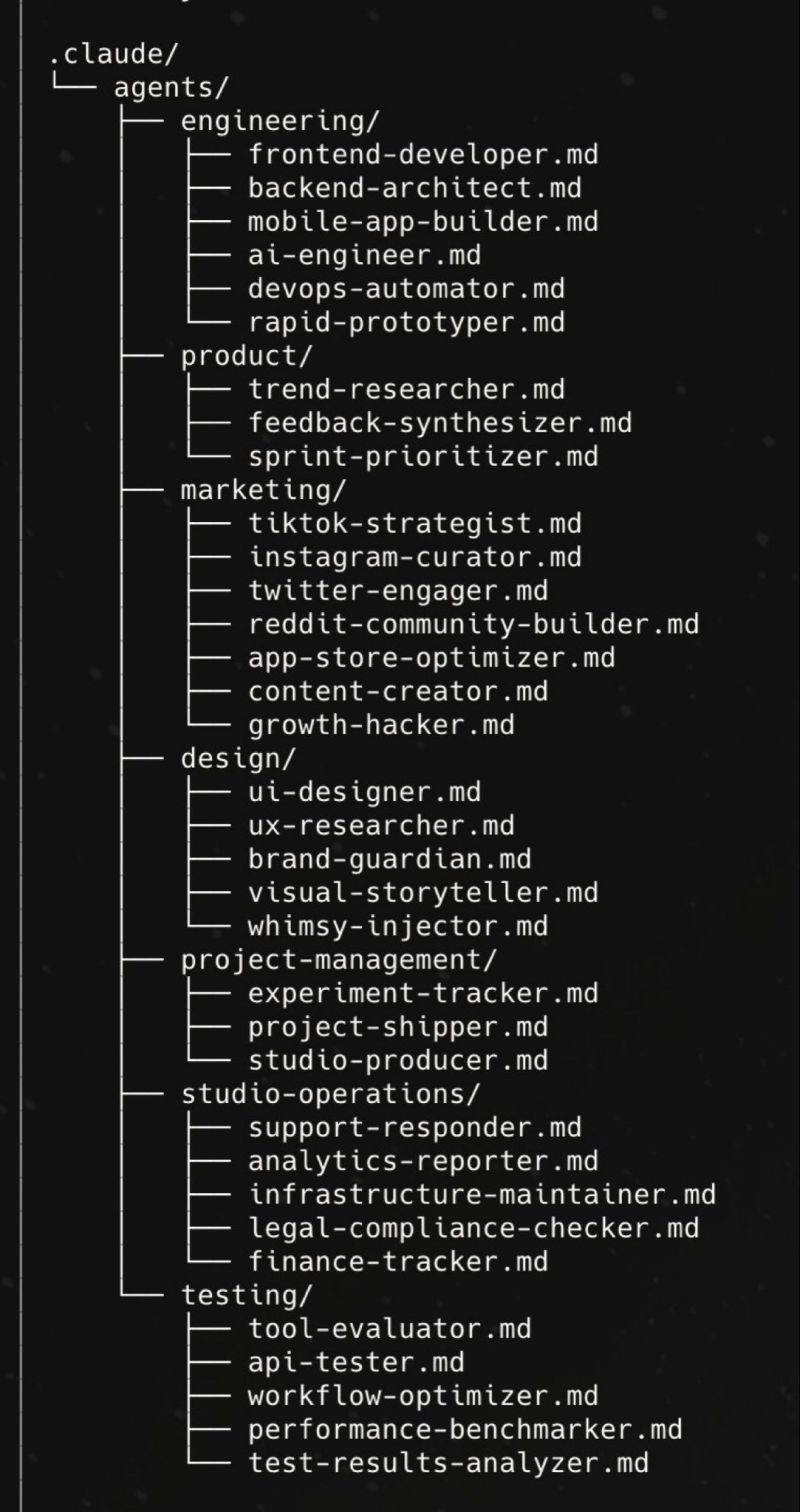

Deep agents extend simple loop-based LLM agents by adding a detailed system prompt, planning tools, sub-agents, and file system access. These features let them plan and execute complex tasks over longer time horizons, unlike "shallow" agents. Examples include Claude Code for coding and Manus, which uses a file system for memory and collaboration [1, 2].

Following physics-informed neural networks, we may see a rise of physics-informed (and other discipline-informed) LLMs, as I discussed with Dominik Matoulek. This might go beyond traditional fine-tuning.

Swarm Coding & Self-Writing Systems

Luke Bjerring's concept of swarm coding pushes software development further: instead of one AI co-pilot, you orchestrate multiple background agents working in parallel.
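The orchestration idea, several background agents working in parallel on separate tasks, maps naturally onto asynchronous code. The following is a minimal sketch under my own assumptions: `background_agent` just sleeps briefly where a real agent would call a model and edit files, and the task names are invented.

```python
import asyncio


async def background_agent(name: str, task: str) -> str:
    """Pretend to work on a task; the sleep stands in for model and tool calls."""
    await asyncio.sleep(0.01)
    return f"{name} finished: {task}"


async def run_swarm(tasks: list[str]) -> list[str]:
    """Start one agent per task and wait for all of them concurrently."""
    jobs = [background_agent(f"agent-{i}", task) for i, task in enumerate(tasks, start=1)]
    return await asyncio.gather(*jobs)  # results come back in task order


results = asyncio.run(run_swarm(["write tests", "refactor parser", "update docs"]))
for line in results:
    print(line)
```

The human's role shifts from writing each change to splitting the work into independent tasks and reviewing what comes back.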

Similarly, the dream of a self-writing internet and codebase is becoming tangible. Imagine a world where websites, APIs, and even infrastructures adapt themselves automatically—AI isn't just assisting coding; it's building the next layer of the web.

Conclusion: The emergence of sophisticated AI agents presents a classic "high-risk, high-reward" scenario. While their potential to autonomously solve complex problems is immense, the associated costs and security vulnerabilities are equally significant. Therefore, the path forward requires a dual focus: advancing agent capabilities through concepts like "deep agents" and domain-specific knowledge, while simultaneously building the essential guardrails—robust evaluation benchmarks and strict cost controls—to harness their power safely and sustainably.

Workflow Orchestration: n8n, FastMCP, and Beyond

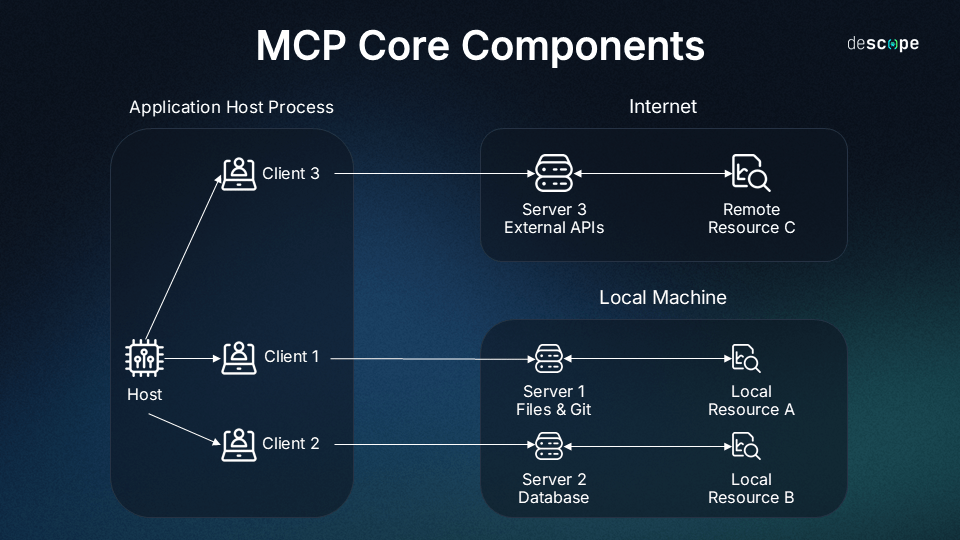

An MCP server (Model Context Protocol server) is a specialized service that securely exposes external tools, data sources, or functionalities, such as APIs, file systems, databases, or services like GitHub, to AI models (LLMs).

Solves the M×N integration problem: Without MCP, developers must build custom connectors for every AI model–tool pairing. With MCP, any MCP-compatible AI client can plug into any MCP server—reducing effort and redundancy. [3, 4, 5]

Simplifies developer workflows: Acts like a "smart adapter" or "menu" for AI agents. The AI sees what capabilities are available (tools, data), and can request them without needing to know how they are implemented. [6, 7]

Enhances security and compliance: By managing access through well-defined servers, enterprises maintain control over data flow, reducing hallucination risks and data [8, 9]
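The integration argument above can be illustrated with a toy example. To be clear, this is not the MCP wire protocol and not the FastMCP API: `ToolServer`, `register`, `list_tools`, and `call_tool` are hypothetical names that only loosely mirror the idea of a server that lists its tools and runs them by name.

```python
from typing import Callable


class ToolServer:
    """Toy stand-in for an MCP-style server: lists tools and runs them by name."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: dict[str, tuple[str, Callable[..., object]]] = {}

    def register(self, tool_name: str, description: str, fn: Callable[..., object]) -> None:
        self._tools[tool_name] = (description, fn)

    def list_tools(self) -> dict[str, str]:
        """What a client sees: tool names and descriptions, no implementation."""
        return {name: desc for name, (desc, _) in self._tools.items()}

    def call_tool(self, tool_name: str, **arguments: object) -> object:
        if tool_name not in self._tools:
            raise KeyError(f"unknown tool: {tool_name}")
        return self._tools[tool_name][1](**arguments)


def connectors_needed(n_clients: int, n_servers: int, shared_protocol: bool) -> int:
    """Custom pairings cost M x N; a shared protocol costs M + N adapters."""
    return n_clients + n_servers if shared_protocol else n_clients * n_servers


server = ToolServer("calculator")
server.register("add", "Add two integers", lambda a, b: a + b)
result = server.call_tool("add", a=2, b=3)
```

With 4 clients and 6 tools, `connectors_needed` gives 24 custom integrations without a shared protocol and 10 adapters with one, which is the whole point of standardizing the interface.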

Tools like n8n and FastMCP 2.0 are reshaping automation for AI engineering. Instead of scripting everything manually, you can design agentic pipelines with drag-and-drop logic in n8n, while FastMCP lets you define MCP servers in Python.

For data scientists, this means faster prototyping, reproducible pipelines, and mixing human + AI steps seamlessly.

Private & Decentralized AI

As AI becomes embedded in our workflows, privacy matters more than ever. Google indexing ChatGPT conversations has raised alarms, pushing the discussion toward private AI.

1. Local AI (Edge Computing)

- How it works: The AI model runs entirely on your device (laptop, phone, or private server) without sending data to the cloud.
- Pros: Maximum privacy; nothing leaves your machine.
- Cons: Limited by your device's compute power; big models may be slow or not fit in memory.
- Examples:
  - Running LLaMA, Mistral, or GPT models locally with Ollama, LM Studio, or llama.cpp
  - Apple's and Google's on-device ML for mobile

2. Federated Learning

- How it works: Many devices train the same model locally on their own data, and only share model updates (gradients), not raw data, with a central or decentralized coordinator.
- Pros: Data stays local; useful for collaborative AI without pooling private data.
- Cons: Updates can sometimes still leak information unless combined with differential privacy or secure aggregation.
- Examples:
  - Google's Gboard predictive text training
  - Open-source tools like Flower or FedML
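The federated learning loop described above can be sketched with a one-parameter model. This is a toy simulation under my own assumptions, not how Flower or FedML are used: each client fits y = w * x on its private data, and the coordinator averages the returned weights, weighted by dataset size (for a single local step this is equivalent to averaging gradients).

```python
def local_update(weights: list[float], data: list[tuple[float, float]], lr: float = 0.05) -> list[float]:
    """One gradient step of a least-squares fit y = w * x on a client's private data."""
    w = weights[0]
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return [w - lr * grad]


def federated_average(client_weights: list[list[float]], client_sizes: list[int]) -> list[float]:
    """Average client weights, weighted by how much data each client holds."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two clients whose private data follows y = 3x; raw data never leaves them.
clients = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0)]]
sizes = [len(d) for d in clients]

global_weights = [0.0]
for _ in range(30):
    updates = [local_update(global_weights, data) for data in clients]  # runs on-device
    global_weights = federated_average(updates, sizes)                  # runs on coordinator

print(f"learned w = {global_weights[0]:.4f}")
```

Only the weight vectors cross the network here. As noted in the cons above, those updates can still leak information, which is where secure aggregation and differential privacy come in.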

3. Fully Decentralized AI (Peer-to-Peer)

- How it works: Model training or inference happens across a distributed network of nodes, often with encryption and consensus mechanisms.
- Pros: No single point of control; potential for censorship resistance and privacy.
- Cons: Still experimental, slower, and requires trust in node security and cryptographic protocols.
- Examples:
  - OpenMined's PySyft for secure multiparty computation (SMPC)
  - Golem, Bittensor, and other blockchain-based AI markets

4. Privacy-Enhancing Computation (often combined with decentralization)

- Techniques you can layer on top:
  - Homomorphic encryption: lets AI compute on encrypted data without decrypting it
  - Secure multiparty computation: splits the computation across nodes so no single node sees all the data
  - Differential privacy: adds statistical noise to prevent individual identification
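To give a feel for secure multiparty computation, here is a minimal sketch of additive secret sharing, one of its basic building blocks. The prime modulus, the function names, and the three-party setup are illustrative assumptions; real SMPC frameworks add networking, protection against malicious parties, and much more.

```python
import secrets

PRIME = 2**61 - 1  # all arithmetic is done modulo this prime


def share(secret: int, n_parties: int) -> list[int]:
    """Split a secret into n random-looking shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine shares; any subset smaller than all of them reveals nothing."""
    return sum(shares) % PRIME


def secure_sum(private_values: list[int], n_parties: int = 3) -> int:
    """Sum private inputs without any single party seeing an individual value."""
    all_shares = [share(v, n_parties) for v in private_values]
    # Party j only ever receives the j-th share of each input and adds them up.
    partial_sums = [sum(s[j] for s in all_shares) % PRIME for j in range(n_parties)]
    return reconstruct(partial_sums)


total = secure_sum([10, 20, 12])
```

Each party sees only uniformly random numbers, yet the combined partial sums give the exact total, assuming inputs and their sum stay below the modulus.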

Private RAG options

Local Retrieval + Cloud LLM

- Data is indexed locally (Milvus, Chroma, FAISS, etc.).
- A query retrieves the top-k docs, and only those snippets are sent to the LLM.
- More private than uploading everything, since your full corpus never leaves your environment; the retrieved snippets still do.

Federated RAG

- Multiple peers hold their own private databases.
- Queries are broadcast securely, and only relevant snippets are shared.
- Think of it like "federated search + privacy-preserving RAG."

Confidential Hybrid RAG

- Using confidential computing (e.g., NVIDIA's H100 with trusted enclaves, or Intel SGX), retrieval and embedding can run inside a hardware-protected enclave, with data kept encrypted outside it.
- Even if using a cloud service, the provider can't see the raw inputs.
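The first option, local retrieval with a cloud LLM, is the easiest to sketch. In the example below the "embedding" is a plain bag-of-words count and the corpus is invented, both purely as stand-ins; a real setup would use an embedding model and a local vector store. The point is only that the prompt sent to the cloud contains the top-k snippets and nothing else.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Bag-of-words stand-in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Rank local documents by similarity to the query and keep the best k."""
    q = embed(query)
    ranked = sorted(corpus, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_cloud_prompt(query: str, corpus: list[str], k: int = 2) -> str:
    """Only the retrieved snippets are placed in the prompt that leaves the machine."""
    snippets = top_k(query, corpus, k)
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical private corpus that stays on the local machine.
corpus = [
    "invoice total for march was 4200 euros",
    "employee salaries are confidential",
    "the march invoice was paid in april",
    "office plants need water weekly",
]
prompt = build_cloud_prompt("march invoice total", corpus)
```

Note that the retrieved snippets themselves are still visible to the cloud provider, so this pattern limits exposure rather than eliminating it.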

Conclusion: Private and decentralized AI is not just a technical preference but a safeguard for private company data. It protects sensitive information, reduces dependence on centralized providers, and empowers communities with greater transparency and resilience. By decentralizing intelligence, we create AI systems that are trustworthy and respect privacy.

Superintelligence & Timelines

Finally, any forward-looking AI blog must touch on the superintelligence horizon. Predictions vary, but as systems like GPT-5 grow in reasoning capacity and multi-agent coordination emerges, we may be closer to autonomous AI ecosystems than expected.

Conclusion: Engineering the Next AI Era

From vibe analysis to swarm coding, from private AI to decentralized networks, we're entering an era where human thinking might be augmented.

The challenge is to stay playful, responsible, and innovative while balancing costs, privacy, and long-term impact.

The future of AI engineering isn't just technical—it's cultural, ethical, and deeply human.